“Dost thou think, because thou art virtuous, there shall be no more cakes and ale?”

— William Shakespeare, Twelfth Night.

Experts on nutrition are like experts on sexuality. No matter how professional they are in general, in some way they are always trying to justify their own lifestyle. They share a tendency to think that their own lifestyle is the one that everybody else should follow and they are always eager to save us from our own sins, sexual or dietary. The new puritans want to save us from red meat. It is unknown whether Michael Pollan’s In Defense of Food was reporting the news or making the news, but its coupling of not eating too much and not eating meat is common. More magazine’s take on saturated fat was very sympathetic to my own point of view and I probably shouldn’t complain that tacked on at the end was the conclusion that “most physicians will probably wait for more research before giving you carte blanche to order juicy porterhouse steaks.” I’m not sure that my physician knows about the research that already exists or that I am waiting for his permission on a zaftig steak.

“Daily Red Meat Raises Chances Of Dying Early” was the headline in the Washington Post last year. This scary story was accompanied by the photo below. The gloved hand slicing roast beef with a scalpel-like instrument was probably intended to evoke CSI autopsy scenes, although, to me, the beef still looked pretty good if slightly over-cooked. I don’t know the reporter, Rob Stein, but I can’t help feeling that we’re not talking Woodward and Bernstein here. For those too young to remember Watergate, the reporters from the Post were encouraged to “follow the money” by Deep Throat, their anonymous whistle-blower. A similar character, claiming to be an insider and identifying himself or herself as “Fat Throat,” has been sending intermittent emails to bloggers, suggesting that they “follow the data.”

The Post story was based on a research report, “Meat Intake and Mortality,” published in the medical journal Archives of Internal Medicine by Sinha and coauthors. It got a lot of press and had some influence, and it recently re-surfaced in the Harvard Men’s Health Watch in a two-part article called, incredibly enough, “Meat or beans: What will you have?” The Health Watch does admit that “red meat is a good source of iron and protein and…beans can trigger intestinal gas” and that they are “very different foods,” but somehow it is assumed that we can substitute one for the other.

Let me focus on Dr. Sinha’s article and try to explain what it really says. My conclusion will be that there is no reason to think that any danger of red meat has been demonstrated and I will try to point out some general ways in which one can deal with these kinds of reports of scientific information.

A few points to remember first. During the forty years that we describe as the obesity and diabetes epidemic, protein intake has been relatively constant; almost all of the increase in calories has been due to an increase in carbohydrates; fat, if anything, went down. During this period, consumption of almost everything increased. Wheat and corn, of course, went up. So did fruits and vegetables and beans. The two things whose consumption went down were red meat and eggs. In other words, there is some a priori reason to think that red meat is not a health risk and that the burden of proof should be on demonstrating harm. Looking ahead, the paper, like analysis of the population data, will rely entirely on associations.

The conclusion of the study was that “Red and processed meat intakes were associated with modest increases in total mortality, cancer mortality, and cardiovascular disease mortality.” Now, a modest increase in mortality is a fairly big step down from “Dying Early,” and surely a step down from the editorial quoted in the Washington Post. Written by Barry Popkin, professor of global nutrition at the University of North Carolina, it said: “This is a slam-dunk to say that, ‘Yes, indeed, if people want to be healthy and live longer, consume less red and processed meat.'” Now, I thought that the phrase “slam-dunk” was pretty much out after George Tenet, then head of the CIA, told President Bush that the case for Weapons of Mass Destruction in Iraq was a slam-dunk. (I found an interview with Tenet after his resignation quite disturbing; when the director of the CIA can’t lie convincingly, we are in big trouble). And quoting Barry Popkin is like getting a second opinion from a member of the “administration.” It’s definitely different from investigative reporting like, you know, reading the article.

So what does the research article really say? As I mentioned in my blog on eggs, when I read a scientific paper, I look for the pictures. The figures in a scientific paper usually make clear to the reader what is going on — that is the goal of scientific communication. But there are no figures. With no figures, Dr. Sinha’s research paper has to be analyzed for what it does have: a lot of statistics. Many scientists share Mark Twain’s suspicion of statistics, so it is important to understand how it is applied. A good statistics book will have an introduction that says something like “what we do in statistics, is try to put a number on our intuition.” In other words, it is not really, by itself, science. It is, or should be, a tool for the experimenter’s use. The problem is that many authors of papers in the medical literature allow statistics to become their master rather than their servant: numbers are plugged into a statistical program and the results are interpreted in a cut-and-dried fashion with no intervention of insight or common sense. On the other hand, many medical researchers see this as an impartial approach. So let it be with Sinha.

What were the outcomes? The study population of 322,263 men and 223,390 women was broken up into five groups (quintiles) according to meat consumption, the highest taking in about 7 times as much as the lowest group (big differences). The Harvard News Letter says that the men who ate the most red meat had a 31 % higher death rate than the men who ate the least meat. This sounds serious but does it tell you what you want to know? In the media, scientific results are almost universally reported this way but it is entirely misleading. (Bob has 30 % more money than Alice but they may both be on welfare). To be fair, the Abstract of the paper itself reported this as a hazard ratio of 1.31 which, while still misleading, is less prejudicial. Hazard ratio is a little bit complicated but, in the end, it is similar to odds ratio or risk ratio, which is pretty much what you think: an odds ratio of 2 means you’re twice as likely to win with one strategy as compared to the other. A moment’s thought tells you that this is not good information because you can get an odds ratio of 2, that is, you can double your chances of winning the lottery, by buying two tickets instead of one. You need to know the actual odds of each strategy. Taking the ratio hides information. Do reporters not know this? Some have told me they do but that their editors are trying to gain market share and don’t care. Let me explain it in detail. If you already understand, you can skip the next paragraph.

A trip to Las Vegas

Taking the hazard ratio as more or less the same as odds ratio or risk ratio, let’s consider applying odds (in the current case, they are very similar). So, we are in Las Vegas and it turns out that there are two blackjack tables and, for some reason (different number of decks or something), the odds are different at the two tables (odds are ways of winning divided by ways of not winning). Table 1 pays out on average once every 100 hands. Table 2 pays out once in 67 hands. The odds are 1/99, or close to one in a hundred, at the first table and 1/66 at the second. The odds ratio is, obviously, the ratio of the two odds: 1/66 divided by 1/99, or 1.5. (The odds ratio would be 1 if there were no difference between the two tables).

Right off, something is wrong: if you were just given the odds ratio you would have lost some important information. The odds ratio tells you that one gambling table is definitely better than the other but you need additional information to find out that the odds aren’t particularly good at either table: technically, information about the absolute risk was lost.
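For readers who like to check the arithmetic, here is a minimal Python sketch of the two tables. The function and variable names are my own, chosen for illustration, and the payout rates are the made-up ones from the example above. It computes the odds at each table and their ratio, then prints the absolute chance of winning a hand, which is the piece the ratio throws away.

```python
from fractions import Fraction

def odds(wins, hands):
    """Odds: ways of winning divided by ways of not winning."""
    return Fraction(wins, hands - wins)

def probability(wins, hands):
    """Probability: ways of winning divided by total possibilities."""
    return Fraction(wins, hands)

# Illustrative payout rates from the example: 1 win per 100 hands, 1 win per 67 hands.
odds_table_1 = odds(1, 100)   # 1/99
odds_table_2 = odds(1, 67)    # 1/66

# The odds ratio compares the tables but says nothing about how good either one is.
odds_ratio = odds_table_2 / odds_table_1

print("odds ratio:", float(odds_ratio))
print("chance of winning a hand, table 1:", float(probability(1, 100)))
print("chance of winning a hand, table 2:", float(probability(1, 67)))
```

The ratio alone would look the same if the tables paid out once in 10 hands and once in 7; only the absolute numbers tell you that both are long shots.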

So knowing the odds ratio by itself is not much help. But since we know the absolute risk of each table, does that help you decide which table to play? Well, it depends who you are. For the guy who is at the blackjack table when you go up to your hotel room to go to sleep and who is still sitting there when you come down for the breakfast buffet, things are going to be much better at the second table. He will play hundreds of hands and the better odds ratio of 1.5 will pay off in the long run. Suppose, however, that you are somebody who will take the advice of my cousin the statistician, who says to just go and play one hand for the fun of it, just to see if the universe really loves you (that’s what gamblers are really trying to find out). You’re going to play the hand and then, win or lose, you are going to go do something else. Does it matter which table you play at? Obviously it doesn’t. The odds ratio doesn’t tell you anything useful because you know that your chances of winning are pretty slim either way.
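The difference between the all-night player and the one-hand player can be put in numbers with a rough sketch. The figure of 300 hands is my own assumption for "hundreds of hands," and the expected number of wins is simply the number of hands times the chance of winning each one.

```python
def expected_wins(p_win, hands):
    """Average number of winning hands over `hands` independent plays."""
    return p_win * hands

P_TABLE_1 = 1 / 100   # illustrative payout rate from the example
P_TABLE_2 = 1 / 67

# Assumption: "hundreds of hands" is taken to be 300 for the all-night player.
for hands in (1, 300):
    w1 = expected_wins(P_TABLE_1, hands)
    w2 = expected_wins(P_TABLE_2, hands)
    print(f"{hands:>3} hands: table 1 = {w1:.3f} wins, "
          f"table 2 = {w2:.3f} wins, difference = {w2 - w1:.3f}")
```

Over 300 hands the second table is worth about one and a half extra wins on average. For a single hand the gap is about half of one percent of a win, which is why it hardly matters where the one-hand player sits.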

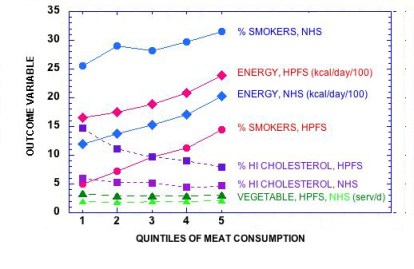

Now, going over to the Red Meat article, the hazard ratio (again, roughly the odds ratio) between high and low red meat intakes for all-cause mortality for men, for example, is 1.31 or, as they like to report in the media, a 31 % higher risk of dying, which sounds pretty scary. But what is the absolute risk? To find that we have to find the actual number of people who died in the high red meat quintile and the low end quintile. This is easy for the low end: 6,437 people died from the group of 64,452, so the probability (probability is ways of winning divided by total possibilities) of dying is 6,437/64,452 or just about 0.10 or 10 %. It’s a little trickier for the high red meat consumers. There, 13,350 died. Again, dividing that by the number in that group, we find an absolute risk of 0.21 or 21 %, which seems pretty high, and the absolute difference in risk is an increase of about 11 %, which still seems pretty significant. Or is it? In these kinds of studies, you have to ask about confounders, variables that might bias the results. Well, here, one is not hard to find. Table 1 reveals that the high red meat group had 3 times the number of smokers. (Not 31 % more but 3 times more). So the authors corrected the data for this and other effects (education, family history of cancer, BMI, etc.), which is how the final value of 1.31 was obtained. Since we know the absolute value of risk in the lowest red meat group, 0.10, we can calculate the risk in the highest red meat group, which will be 0.10 × 1.31 = 0.131. The absolute increase in risk from eating red meat, a lot more red meat, is then 0.131 – 0.10 = 0.031 or 3.1 %, which is quite a bit less than we thought.
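The same calculation can be laid out as a short Python sketch. Two things here are assumptions rather than numbers quoted from the paper: that the highest quintile is the same size as the lowest (quintiles split the 322,263 men into roughly equal fifths), and that the hazard ratio can be treated as a simple risk ratio, as I have been doing throughout.

```python
# Counts for men, all-cause mortality, as quoted in the text.
deaths_low = 6_437      # lowest red meat quintile
deaths_high = 13_350    # highest red meat quintile
group_size = 64_452     # size of the lowest quintile

# Assumption: the highest quintile has the same number of men as the lowest.
risk_low = deaths_low / group_size
risk_high_unadjusted = deaths_high / group_size

# Assumption: treat the adjusted hazard ratio as if it were a risk ratio.
hazard_ratio = 1.31
risk_high_adjusted = risk_low * hazard_ratio
absolute_increase = risk_high_adjusted - risk_low

print(f"risk, lowest quintile:               {risk_low:.3f}")
print(f"risk, highest quintile (unadjusted): {risk_high_unadjusted:.3f}")
print(f"risk, highest quintile (adjusted):   {risk_high_adjusted:.3f}")
print(f"absolute increase after adjustment:  {absolute_increase:.3f}")
```

The unadjusted gap is the one inflated by smoking and the other confounders; the adjusted gap of about three percentage points is what the 31 % headline actually amounts to.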

Now, we can see that the odds ratio of 1.31 is not telling us much — and remember this is for big changes, like 6 or 7 times as much meat; doubling red meat intake (quintiles 1 and 2) leads to a hazard ratio of 1.07. What is a meaningful odds ratio? For comparison, the odds ratio for smoking vs not smoking for incidence of lung disease is about 22.

Well, 3.1 % is not much but it’s something. Are we sure? Remember that this is a statistical outcome and that means that some people in the high red meat group had lower risk, not higher risk. In other words, this is what is called statistically two-tailed, that is, the statistics reflect changes that go both ways. What is the danger in reducing meat intake? The data don’t really tell you that. Unlike cigarettes, where there is little reason to believe that anybody’s lungs really benefit from cigarette smoke (and the statistics are due to random variation), we know that there are many benefits to protein, especially if it replaces carbohydrate in the diet; that is, the variation may be telling us something real. With odds ratios around 1.31 (again, a value of 1 means that there is no difference) you are almost as likely to benefit from adding red meat as you are from reducing it. The odds still favor things getting worse but it really is a risk in both directions. You are at the gaming tables. You don’t get your chips back. If reducing red meat does not reduce your risk, it may increase it. So much for the slam dunk.

What about public health? Many people would say that for a single person, red meat might not make a difference but if the population reduced meat by half, we would save thousands of lives. The authors do want to do this. At this point, before you and your family take part in a big experiment to save health statistics in the country, you have to ask how strong the relations are. To understand the quality of the data, you must look for things that would not be expected to have a correlation. “There was an increased risk associated with death from injuries and sudden death with higher consumption of red meat in men but not in women.” The authors dismiss this because the numbers were smaller (343 deaths) but the whole study is about small differences and it sounds like we are dealing with a good deal of randomness. Finally, the authors set out from the start to investigate red meat. To be fair, they also studied white meat which was slightly beneficial. But what are we to compare the meat results to? Why red meat? What about potatoes? Cupcakes? Breakfast cereal? Are these completely neutral? If we ran these through the same computer, what would we see? And finally there is the elephant in the room: carbohydrate. Basic biochemistry suggests that a roast beef sandwich may have a different effect than roast beef in a lettuce wrap.

So I’ve given you the perspective of a biochemistry professor. This was a single paper and surely not the worst, but I think it’s not really about science. It’s about sin.

*

Nutrition & Metabolism Society