“…789 deaths were reported in Doll and Hill’s original cohort. Thirty-six of these were attributed to lung cancer. When these lung cancer deaths were counted in smokers versus non-smokers, the correlation virtually sprang out: all thirty-six of the deaths had occurred in smokers. The difference between the two groups was so significant that Doll and Hill did not even need to apply complex statistical metrics to discern it. The trial designed to bring the most rigorous statistical analysis to the cause of lung cancer barely required elementary mathematics to prove his point.”

Siddhartha Mukherjee —The Emperor of All Maladies.

Scientists don’t like philosophy of science. It is not just that pompous phrases like hypothetico-deductive systems are such a turn-off but that we rarely recognize it as what we actually do. In the end, there is no definition of science and it is hard to generalize about actual scientific behavior. It’s a human activity and precisely because it puts a premium on creativity, it defies categorization. As the physicist Steven Weinberg put it, echoing Justice Stewart on pornography:

“There is no logical formula that establishes a sharp dividing line between a beautiful explanatory theory and a mere list of data, but we know the difference when we see it — we demand a simplicity and rigidity in our principles before we are willing to take them seriously [1].”

A frequently stated principle is that “observational studies only generate hypotheses.” The related idea that “association does not imply causality” is also common, usually cited by those authors who want you to believe that the association that they found does imply causality. These ideas are not right or, at least, they insufficiently recognize that scientific experiments are not so easily wedged into categories like “observational studies.” The principles are also invoked by bloggers and critics to discredit the continuing stream of observational studies that make an association between their favorite targets (eggs, red meat, sugar-sweetened soda) and a metabolic disease or cancer. In most cases, the studies are getting what they deserve but the bills of indictment are not quite right. It is usually not simply that they are observational studies but rather that they are bad observational studies and, in any case, the associations are so weak that it is reasonable to say that they are an argument for a lack of causality. On the assumption that good experimental practice and interpretation can be even roughly defined, let me offer principles that I think are a better representation, insofar as we can make any generalization, of what actually goes on in science:

Observations generate hypotheses.

Observational studies test hypotheses.

Associations do not necessarily imply causality.

In some sense, all science is associations.

Only mathematics is axiomatic.

If you notice that kids who eat a lot of candy seem to be fat, or even if you notice that candy makes you yourself fat, that is an observation. From this observation, you might come up with the hypothesis that sugar causes obesity. A test of your hypothesis would be to see if there is an association between sugar consumption and incidence of obesity. There are various ways to do this; the simplest epidemiologic approach is to compare the history of the eating behavior of individuals (insofar as you can get it) with how fat they are. When you do this comparison you are testing your hypothesis. There are an infinite number of things that you could have measured as an independent variable (meat, TV hours, distance from the French bakery) but your hypothesis was that it was candy. Mike Eades described falling asleep as a child by trying to think of everything in the world. You just can’t test them all. As Einstein put it, “your theory determines the measurement you make.”

Associations predict causality. Hypotheses generate observational studies, not the other way around.

In fact, association can be strong evidence for causation and frequently provides support for, if not absolute proof of, the idea to be tested. A correct statement is that association does not necessarily imply causation. In some sense, all science is observation and association. Even thermodynamics, that most mathematical and absolute of sciences, rests on observation. As soon as somebody observes two systems in thermal equilibrium with a third but not with each other (zeroth law), the jig is up. When somebody builds a perpetual motion machine, that’s it. It’s all over.

Biological mechanisms, or perhaps any scientific theory, are never proved. By analogy with a court of law, you cannot be found innocent, only not guilty. That is why excluding a theory is stronger than showing consistency. The grand epidemiological study of macronutrient intake vs diabetes and obesity shows that increasing carbohydrate is associated with increased calories even under conditions where fruits and vegetables also went up and fat, if anything, went down. It is an observational study but it is strong because it gives support to a lack of causal effect of increased carbohydrate and decreased fat on outcome. The failure of total or saturated fat to have any benefit is the kicker here. It is now clear that prospective experiments have shown in the past, and will continue to show, the same negative outcome. Of course, in a court of law, if you are found not guilty of child abuse, people may still not let you move into their neighborhood. It is that saturated fat should never have been indicted in the first place.

An association will tell you about causality 1) if the association is strong and 2) if there is a plausible underlying mechanism and 3) if there is no more plausible explanation — for example, countries with a lot of TV sets have modern life styles that may predispose to cardiovascular disease; TV does not cause CVD.

Re-inventing the wheel. Bradford Hill and the history of epidemiology.

Everything written above is true enough or, at least, it seemed that way to me. I thought of it as an obvious description of what everybody knows. The change to saying that “association does not necessarily imply causation” is important but not that big a deal. It is common sense or logic and I had made it into a short list of principles. It was a blogpost of reasonable length. I described it to my colleague Gene Fine. His response was “aren’t you re-inventing the wheel?” Bradford Hill, he explained, pretty much the inventor of modern epidemiology, had already established these and a couple of other principles. Gene cited The Emperor of All Maladies, an outstanding book on the history of cancer. I had read The Emperor of All Maladies on his recommendation and I remembered Bradford Hill and the description of the evolution of the ideas of epidemiology, population studies and randomized controlled trials. I also had a vague memory of reading the story in James LeFanu’s The Rise and Fall of Modern Medicine, another captivating history of medicine. However, I had not really absorbed these as principles. Perhaps we’re just used to it, but saying that an association implies causality only if it is a strong association is not exactly a scientific breakthrough. It seems an obvious thing that you might say over coffee or in response to somebody’s blog. It all reminded me of learning, in grade school, that the Earl of Sandwich had invented the sandwich and thinking “this is an invention?” Woody Allen thought the same thing and wrote the history of the sandwich and the Earl’s early failures: “In 1741, he places bread on bread with turkey on top. This fails. In 1745, he exhibits bread with turkey on either side. Everyone rejects this except David Hume.”

At any moment in history our background knowledge, and accepted methodology, may be limited. Some problems seem to have simple solutions. But simple ideas are not always accepted. The concept of the randomized controlled trial (RCT), obvious to us now, was hard won. Proving that any particular environmental factor (diet, smoking, pollution or toxic chemicals) was the cause of a disease and that, by reducing that factor, the disease could be prevented turned out to be a very hard sell, especially to physicians whose view of disease may have been strongly colored by the idea of an infective agent.

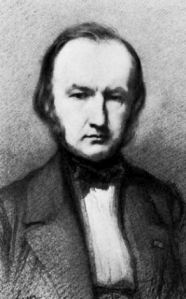

The Rise and Fall of Modern Medicine describes Bradford Hill’s two demonstrations, that streptomycin in combination with PAS (para-aminosalicylic acid) could cure tuberculosis and that tobacco causes lung cancer, as one of the Ten Definitive Moments in the history of modern medicine (others shown in the textbox). Hill was Professor of Medical Statistics at the London School of Hygiene and Tropical Medicine but was not formally trained in statistics and, like many of us, thought of proper statistics as common sense. An early near-fatal case of tuberculosis also prevented formal medical education. His first monumental accomplishment was, ironically, to demonstrate how tuberculosis could be cured with the combination of streptomycin and PAS. In the late 1940s, Hill and co-worker Richard Doll undertook a systematic investigation of the risk factors for lung cancer. Hill’s eventual success was accompanied by a description of the principles that allow you to say when association can be taken as causation.


Ten Definitive Moments from Rise and Fall of Modern Medicine.

1941: Penicillin

1949: Cortisone

1950: streptomycin, smoking and Sir Austin Bradford Hill

1952: chlorpromazine and the revolution in psychiatry

1955: open-heart surgery – the last frontier

1963: transplanting kidneys

1964: the triumph of prevention – the case of strokes

1971: curing childhood cancer

1978: the first ‘Test-Tube’ baby

1984: Helicobacter – the cause of peptic ulcer

Wiki says: “in 1965, built upon the work of Hume and Popper, Hill suggested several aspects of causality in medicine and biology…” but his approach was not formal. He never referred to his principles as criteria; he recognized them as common-sense behavior, and his 1965 presentation to the Royal Society of Medicine is a remarkably sober, intelligent document. Although it has been described as an example of an article that, as here, has been read more often in quotations and paraphrases, the original is worth reading even today.

Note: “Austin Bradford Hill’s surname was Hill and he always used the name Hill, AB in publications. However, he is often referred to as Bradford Hill. To add to the confusion, his friends called him Tony.” (This comment is from Wikipedia, not Woody Allen).

The President’s Address

Bradford Hill’s description of the factors that might make you think an association implied causality:

1. Strength. “First upon my list I would put the strength of the association.” This, of course, is exactly what is missing in the continued epidemiological scare stories. Hill describes

“….prospective inquiries into smoking have shown that the death rate from cancer of the lung in cigarette smokers is nine to ten times the rate in non-smokers and the rate in heavy cigarette smokers is twenty to thirty times as great.”

But further:

“On the other hand the death rate from coronary thrombosis in smokers is no more than twice, possibly less, the death rate in nonsmokers. Though there is good evidence to support causation it is surely much easier in this case to think of some features of life that may go hand-in-hand with smoking – features that might conceivably be the real underlying cause or, at the least, an important contributor, whether it be lack of exercise, nature of diet or other factors.”

Doubts about an odds ratio of two or less. That’s where you really have to wonder about causality. The progression of epidemiologic studies that tell you red meat, HFCS, etc. will cause diabetes, prostatic cancer, or whatever, rarely hit an odds ratio of 2. While the published studies may contain disclaimers of the type in Hill’s paper, the PR department of the university where the work is done, and hence the public media, show no such hesitation and will quickly attribute causality to the study as if the odds ratio were 10 instead of 1.2.

2. Consistency: Hill listed the repetition of the results in other studies under different circumstances as a criterion for considering how much an association implied causality. Not mentioned, but of great importance, is that this test cannot be made independent of the first criterion. Consistently weak associations do not generally add up to a strong association. If there is a single practice in modern medicine that is completely out of whack with respect to careful consideration of causality, it is the meta-analysis, where studies with no strength at all are averaged so as to create a conclusion that is stronger than any of its components.

3. Specificity. Hill was circumspect on this point, recognizing that we should have an open mind on what causes what. On specificity of cancer and cigarettes, Hill noted that the two sites in which he showed a cause and effect relationship were the lungs and the nose.

4. Temporality: Obviously, we expect the cause to precede the effect or, as some wit put it “which got laid first, the chicken or the egg.” Hill recognized that it was not so clear for diseases that developed slowly. “Does a particular diet lead to disease or do the early stages of the disease lead to those peculiar dietetic habits?” Of current interest are the epidemiologic studies that show a correlation between diet soda and obesity which are quick to see a causal link but, naturally, one should ask “Who drinks diet soda?”

5. Biological gradient: the association should show a dose response curve. In the case of cigarettes, the death rate from cancer of the lung increases linearly with the number of cigarettes smoked. A subset of the first principle, that the association should be strong, is that the dose-response curve should have a meaningful slope and, I would add, the numbers should be big.

6. Plausibility: On the one hand, this seems critical (the association of egg consumption with diabetes is obviously foolish) but the hypothesis to be tested may have come from an intuition that is far from evident. Hill said, “What is biologically plausible depends upon the biological knowledge of the day.”

7. Coherence: “data should not seriously conflict with the generally known facts of the natural history and biology of the disease”

8. Experiment: It was another age. It is hard to believe that it was in my lifetime. “Occasionally it is possible to appeal to experimental, or semi-experimental, evidence. For example, because of an observed association some preventive action is taken. Does it in fact prevent?” The inventor of the randomized controlled trial would be amazed at how many of these are done and how many fail to prevent. And, most of all, he would be astounded that it doesn’t seem to matter. However, the progression of failures, from Framingham to the Women’s Health Initiative, the lack of association between low fat, low saturated fat and cardiovascular disease, is strong evidence for the absence of causation.

9. Analogy: “In some circumstances it would be fair to judge by analogy. With the effects of thalidomide and rubella before us we would surely be ready to accept slighter but similar evidence with another drug or another viral disease in pregnancy.”

Hill’s final word on what has come to be known as his criteria for deciding about causation:

“Here then are nine different viewpoints from all of which we should study association before we cry causation. What I do not believe — and this has been suggested — is that we can usefully lay down some hard-and-fast rules of evidence that must be obeyed before we accept cause and effect. None of my nine viewpoints can bring indisputable evidence for or against the cause-and-effect hypothesis and none can be required as a sine qua non. What they can do, with greater or less strength, is to help us to make up our minds on the fundamental question – is there any other way of explaining the set of facts before us, is there any other answer equally, or more, likely than cause and effect?” This may be the first critique of the still-to-be-invented Evidence-based Medicine.
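Hill’s first two viewpoints, strength and consistency, are easy to make concrete with a little arithmetic. What follows is only a minimal sketch: the counts and the three "weak studies" are invented for illustration and are not taken from Hill, Doll or any published paper, and the pooling shown is ordinary inverse-variance (fixed-effect) averaging on the log scale, which is one common way a meta-analysis combines results, not necessarily what any particular study did.

```python
import math

def relative_risk(cases_exposed, n_exposed, cases_unexposed, n_unexposed):
    """Risk of disease in the exposed group divided by risk in the unexposed group."""
    return (cases_exposed / n_exposed) / (cases_unexposed / n_unexposed)

def odds_ratio(cases_exposed, n_exposed, cases_unexposed, n_unexposed):
    """Odds of disease in the exposed group divided by odds in the unexposed group."""
    odds_exposed = cases_exposed / (n_exposed - cases_exposed)
    odds_unexposed = cases_unexposed / (n_unexposed - cases_unexposed)
    return odds_exposed / odds_unexposed

def pool_fixed_effect(studies):
    """Inverse-variance pooling of (ratio, ci_low, ci_high) tuples on the log scale.

    The standard error of each study is recovered from its 95% interval.
    Returns the pooled ratio with its own 95% interval.
    """
    weights, log_ratios = [], []
    for ratio, ci_low, ci_high in studies:
        se = (math.log(ci_high) - math.log(ci_low)) / (2 * 1.96)
        weights.append(1.0 / se ** 2)
        log_ratios.append(math.log(ratio))
    total = sum(weights)
    pooled_log = sum(w * x for w, x in zip(weights, log_ratios)) / total
    pooled_se = math.sqrt(1.0 / total)
    return (math.exp(pooled_log),
            math.exp(pooled_log - 1.96 * pooled_se),
            math.exp(pooled_log + 1.96 * pooled_se))

# Hypothetical counts per 10,000 people (illustrative only).
strong_rr = relative_risk(90, 10_000, 10, 10_000)   # a smoking-sized effect
strong_or = odds_ratio(90, 10_000, 10, 10_000)      # close to the RR when disease is rare
weak_rr = relative_risk(12, 10_000, 10, 10_000)     # a typical nutrition headline

# Three hypothetical weak studies, none significant on its own (interval includes 1).
weak_studies = [(1.2, 0.9, 1.6)] * 3
pooled_ratio, pooled_low, pooled_high = pool_fixed_effect(weak_studies)

print(f"strong: RR = {strong_rr:.1f}, OR = {strong_or:.2f}")
print(f"weak:   RR = {weak_rr:.1f}")
print(f"pooled: {pooled_ratio:.2f} ({pooled_low:.2f} to {pooled_high:.2f})")
```

With these made-up numbers the pooled estimate is still about 1.2. Pooling narrows the interval enough to exclude 1, so the result now counts as "significant," but the association is no stronger than it was in any single study.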

Nutritional Epidemiology.

The decision to say that an observational study implies causation is equivalent to an assertion that the results are meaningful, that it is not a random association at all, that it is scientifically sound. Critics of epidemiological studies have relied on their own perceptions and appeal to common sense. When I started this blogpost, I was one of them, and I had not appreciated the importance of Bradford Hill’s principles. The Emperor of All Maladies described Hill’s strategies for dealing with association and causation “which have remained in use by epidemiologists to date.” But have they? The principles are in the texts. Epidemiology, Biostatistics, and Preventive Medicine has a chapter called “The study of causation in Epidemiologic Investigation and Research” from which the dose-response curve was modified. Are these principles being followed? Previous posts in this blog and others have voiced criticisms of epidemiology as it’s currently practiced in nutrition but we were lacking a meaningful reference point. Looking back now, what we see is a large number of research groups doing epidemiology in violation of most of Hill’s criteria.

The red meat scare of 2011 was Pan, et al., and I described, in a previous post, the remarkable blog from Harvard. Their blog explained that the paper was unnecessarily scary because it had described things in terms of “relative risks, comparing death rates in the group eating the least meat with those eating the most. The absolute risks… sometimes help tell the story a bit more clearly. These numbers are somewhat less scary.” I felt it was appropriate to ask “Why does Dr. Pan not want to tell the story as clearly as possible? Isn’t that what you’re supposed to do in science? Why would you want to make it scary?” It was, of course, a rhetorical question.
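The gap between relative and absolute risk is simple arithmetic, and a short sketch shows why the same result can sound scary or not. The baseline risk and relative risk below are hypothetical values chosen for illustration; they are not the numbers from Pan, et al. or from the Harvard blog.

```python
def absolute_risks(baseline_risk, relative_risk):
    """Return (risk in the exposed group, absolute increase over baseline)."""
    exposed_risk = baseline_risk * relative_risk
    return exposed_risk, exposed_risk - baseline_risk

# Hypothetical inputs (assumptions, not data from any study).
baseline = 0.01   # 1% of the low-intake group gets the disease
rr = 1.2          # reported relative risk for the high-intake group

exposed, increase = absolute_risks(baseline, rr)
extra_per_1000 = increase * 1000

print(f"Relative terms: a {100 * (rr - 1):.0f}% increase in risk")
print(f"Absolute terms: {baseline:.1%} -> {exposed:.1%}, "
      f"about {extra_per_1000:.0f} extra cases per 1,000 people")
```

Under these assumptions, the headline "20% increase" and the statement "2 extra cases per 1,000 people" describe exactly the same data.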

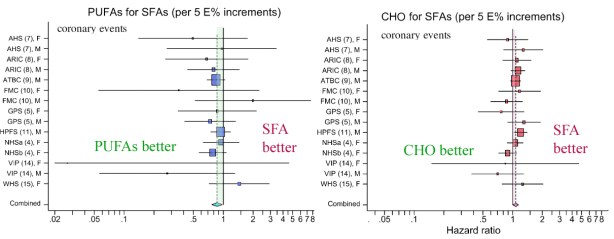

Looking at Pan, et al. in light of Bradford Hill, we can examine some of their data. Figure 2 from their paper shows the risk of diabetes as a function of red meat in the diet. The variable reported is the hazard ratio which can be considered roughly the same as the odds ratio, that is, relative odds of getting diabetes. I have indicated, in pink, those values that are not statistically significant and I grayed out the confidence interval to make it easy to see that these do not even hit the level of 2 that Bradford Hill saw as some kind of cut-off.

The hazard ratios for processed meat are somewhat higher but still less than 2. This is weak data and violates the first and most important of Hill’s criteria. As you go from quartile 2 to 3, there is an increase in risk, but at Q4, the risk goes down and then back up at Q5, in distinction to principle 5 which suggests the importance of dose-response curves. But, stepping back and asking what the whole idea is, asking why you would think that meat has a major and isolatable role, separate from everything else, in a disease of carbohydrate intolerance, you see that this is not rational, this is not science. And Pan is not making random observations. This is a test of the hypothesis that red meat causes diabetes. Most of us would say that it didn’t make any sense to test such a hypothesis but the results do not support the hypothesis.
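The three checks applied above (is the confidence interval clear of 1, does any estimate reach 2, does risk rise steadily with dose) can be written down as a short sketch. The hazard ratios below are hypothetical placeholders shaped like the pattern described here, not the values in Pan’s Figure 2, and the threshold of 2 is my reading of Hill’s remarks rather than a rule he laid down.

```python
# Hypothetical hazard ratios by intake group: (estimate, ci_low, ci_high).
# The lowest-intake group Q1 is the reference, with a hazard ratio of 1.0.
hazard_ratios = {
    "Q2": (1.05, 0.95, 1.16),
    "Q3": (1.12, 1.01, 1.24),
    "Q4": (1.08, 0.97, 1.20),
    "Q5": (1.20, 1.08, 1.33),
}

def excludes_one(ci_low, ci_high):
    """A ratio is conventionally 'significant' when its interval does not contain 1."""
    return ci_low > 1.0 or ci_high < 1.0

def is_monotonic_increasing(values):
    """True when each value is at least as large as the one before it."""
    return all(a <= b for a, b in zip(values, values[1:]))

# Statistical significance: which groups have an interval clear of 1?
significant = [q for q, (_, low, high) in hazard_ratios.items()
               if excludes_one(low, high)]

# Strength (Hill's first viewpoint): does any estimate reach 2?
reaches_two = any(hr >= 2.0 for hr, _, _ in hazard_ratios.values())

# Biological gradient (Hill's fifth viewpoint): does risk rise with each step in dose?
point_estimates = [1.0] + [hr for hr, _, _ in hazard_ratios.values()]
monotonic = is_monotonic_increasing(point_estimates)

print("significant groups:", significant)
print("any hazard ratio of 2 or more:", reaches_two)
print("steady dose-response:", monotonic)
```

Passing all three checks would not establish causation, since Hill insisted that none of his viewpoints is a sine qua non, but failing the first and fifth together is a good reason for doubt.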

What is science?

Science is a human activity and what we don’t like about philosophy of science is that it is about the structure and formalism of science rather than what scientists really do and so there aren’t even any real definitions. One description that I like, from a colleague at the NIH: “What you do in science, is you make a hypothesis and then you try to shoot yourself down.” One of the more interesting sidelights on the work of Hill and Doll, as described in Emperor, was that during breaks from the taxing work of analyzing the questionnaires that provided the background on smoking, Doll himself would step out for a smoke. Doll believed that cigarettes were unlikely to be a cause — he favored tar from paved highways as the causative agent — but as the data came in, “in the middle of the survey, sufficiently alarmed, he gave up smoking.” In science, you try to shoot yourself down and, in the end, you go with the data.

Figure 1. Logarithm of the number of papers in PubMed search with the title containing “meta-analysis” vs. Year of publication
