…what metaphysics is to physics. The old joke came to mind when a reporter asked me yesterday to comment on a paper published in the BMJ: “Intake of saturated and trans unsaturated fatty acids and risk of all cause mortality, cardiovascular disease, and type 2 diabetes: systematic review and meta-analysis of observational studies” by de Souza, et al.

Now the title, “Intake of saturated and trans unsaturated fatty acids…”, tells you right off that this is not good news; lumping together saturated fat and trans-fat is a clear indication of bias. A standby of Atkins-bashers, it is a way of vilifying saturated fat when the data won’t fit. For the study that the reporter asked about, the BMJ provided a summary:

“There was no association between saturated fats and health outcomes in studies where saturated fat generally replaced refined carbohydrates, but there was a positive association between total trans fatty acids and health outcomes. Dietary guidelines for saturated and trans fatty acids must carefully consider the effect of replacement nutrients.”

“But?” The two statements are not really connected. In any case, the message is clear: saturated fat is OK. Trans-fat is not OK. So, we have to be concerned about both saturated fat and trans-fat. Sad.

And “systematic” means the system that the author wants to use. This usually means “we searched the database of…” followed by a meta-analysis, as explained by the overly optimistic “What is …?” series:

The jarring notes are “precise estimate” in combination with “combining…independent studies.” In practice, you usually only repeat an experiment exactly if you suspect that something was wrong with the original study or if the result is sufficiently outside expected values that you want to check it. Such an examination sensibly involves a fine-grained analysis of the experimental details. The idea underlying meta-analysis, however, usually unstated, is that the larger the number of subjects in a study, the more compelling the conclusion. One might make the argument, instead, that if you have two or more studies which are imperfect, combining them is likely to lead to greater uncertainty and more error, not less. I am one who would make such an argument. So where did meta-analysis come from and what, if anything, is it good for?
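The “precise estimate” comes from pooling, and the usual textbook way to pool is inverse-variance weighting (an assumption here; the text does not say which method any particular meta-analysis used). The minimal sketch below, with purely hypothetical numbers, shows why precision is not accuracy: two studies that share the same systematic bias pool into a narrower interval centered on the same wrong value, and that narrower interval can exclude the true effect even though neither study alone was significant.

```python
import math

def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance fixed-effect pooling of log-scale estimates.

    Returns (pooled_estimate, pooled_standard_error)."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * est for w, est in zip(weights, estimates)) / total
    return pooled, math.sqrt(1.0 / total)

def ci95(estimate, se):
    """95% confidence interval using the normal approximation."""
    return estimate - 1.96 * se, estimate + 1.96 * se

# Hypothetical scenario: the true log hazard ratio is 0.0 (no effect),
# but both studies share the same systematic bias of +0.15
# (for example, the same flawed food questionnaire).
true_effect = 0.0
bias = 0.15
study_estimates = [true_effect + bias, true_effect + bias]
study_ses = [0.10, 0.10]

single_low, single_high = ci95(study_estimates[0], study_ses[0])
pooled, pooled_se = pool_fixed_effect(study_estimates, study_ses)
pooled_low, pooled_high = ci95(pooled, pooled_se)

print(f"single study: {study_estimates[0]:.3f}, CI {single_low:.3f} to {single_high:.3f}")
print(f"pooled:       {pooled:.3f}, CI {pooled_low:.3f} to {pooled_high:.3f}")
```

Each single-study interval includes 0 (no effect); the pooled interval is narrower, still centered on the biased value, and no longer includes the true value of 0.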

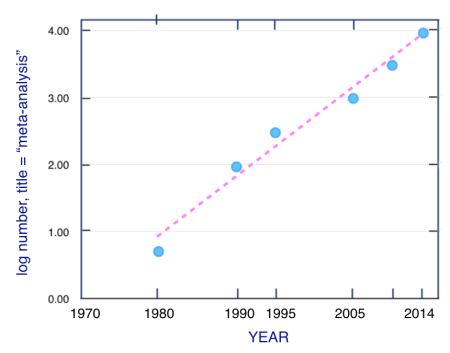

I am trained in enzyme and protein chemistry, but I have worked in a number of different fields, including invertebrate animal behavior. I had never heard of meta-analysis until very recently, that is, until I started doing research in nutrition. In fact, in 1970 there weren’t any meta-analyses, at least not with that phrase in the title, or at least not as determined by my systematic PubMed search. By 1990, there were about 100, and by 2014, there were close to 10,000 (Figure 1).

Figure 1. Logarithm of the number of papers in PubMed search with the title containing “meta-analysis” vs. Year of publication


This exponential growth suggests that the technique grew by reproducing itself. It suggests, in fact, that its origins are in spontaneous generation. In other words, it is popular because it is popular. (It does have obvious advantages; you don’t have to do any experiments.) But does it give any useful information?
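On a logarithmic axis, exponential growth is a straight line, which is what Figure 1 plots. As a back-of-the-envelope check, the sketch below uses only the two approximate counts quoted above (about 100 titles in 1990, close to 10,000 in 2014) and assumes pure exponential growth between them to get the implied growth rate and doubling time.

```python
import math

def doubling_time(count_start, count_end, years):
    """Doubling time (years) implied by pure exponential growth between two counts."""
    rate = math.log(count_end / count_start) / years  # continuous growth rate per year
    return math.log(2) / rate

# Approximate counts from the text: about 100 titles in 1990, close to 10,000 in 2014.
years = 2014 - 1990
rate = math.log(10_000 / 100) / years
print(f"growth rate: {rate:.3f} per year")
print(f"doubling time: {doubling_time(100, 10_000, years):.1f} years")
```

A hundredfold increase over 24 years works out to roughly 19% per year, or a doubling about every 3.6 years.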

Meta-analysis

If you have a study that is under-powered, that is, if you only have a small number of subjects, and you find a degree of variability in the outcome, then combining the results from your experiment with another small study may point you to a consistent pattern. As such, it is a last-ditch, Hail-Mary kind of method. Applying it to large studies that have statistically meaningful results, however, doesn’t make sense, because:

1. If all of the studies go in the same direction, you are unlikely to learn anything from combining them. In fact, if you come out with a value for the output that is different from the value from the individual studies, in science, you are usually required to explain why your analysis improved things. Just saying it is a larger n won’t cut it, especially if it is my study that you are trying to improve on.

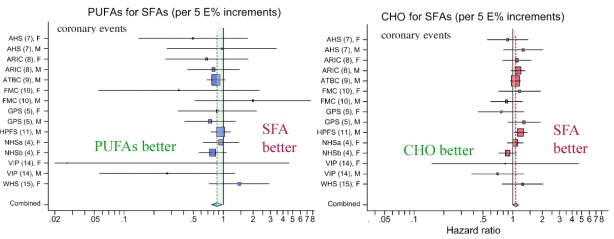

2. In the special case where all the studies show no effect and you come up with a number that is statistically significant, you are, in essence, saying that many wrongs can make a right, as described in a previous blog post on abuse of meta-analyses. In that post, I reiterated the statistical rule that if the 95% CI bar crosses the line for hazard ratio = 1.0, this is taken as an indication that there is no significant difference between the two conditions being compared. The example that I gave was the meta-analysis by Jakobsen, et al. on the effects of SFAs or a replacement on CVD outcomes (Figure 2). Amazingly, in the list of 15 different studies that she used, all but one cross the hazard ratio = 1.0 line. In other words, only one study found that keeping SFAs in the diet provides a lower risk than replacement with carbohydrate. For all the others there was no significant difference. The question is why an analysis was done at all. What could we hope to find? How could 15 studies that show nothing add up to a new piece of information? Most amazing is that some of the studies are more than 20 years old. How could these have had so little impact on our opinion of saturated fat? Why did we keep believing that it was bad?

Figure 2. Hazard ratios and 95% confidence intervals for coronary events and deaths in the different studies in a meta-analysis from Jakobsen, et al. Major types of dietary fat and risk of coronary heart disease: a pooled analysis of 11 cohort studies. Am J Clin Nutr 2009, 89(5):1425-1432.

3. Finally, suppose that you are doing a meta-analysis on several studies and that they have very different outcomes, showing statistically significant associations in different directions: for example, some studies show that substituting saturated fat for carbohydrate increases risk while others show that it decreases risk. What will you gain by averaging them? I don’t know about you, but it doesn’t sound good to me. It makes me think of the old story of the emerging nation that was planning to build a railroad and didn’t know whether to use the gauge of the country to the north or the gauge of the country to the south. The parliament voted to use a gauge that was the average of the two.
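Points 2 and 3 can both be illustrated with a short sketch. It assumes fixed-effect inverse-variance pooling on the log hazard ratio scale, with Cochran's Q and the I² statistic as the heterogeneity measure (standard tools, but the text does not say which method any cited analysis used). All numbers are hypothetical and are not taken from Jakobsen, et al. or de Souza, et al.

```python
import math

Z95 = 1.96  # two-sided 95% normal quantile

def from_ci(hr_low, hr_high):
    """Recover (log hazard ratio, standard error) from a reported 95% CI,
    assuming the CI was computed symmetrically on the log scale."""
    log_low, log_high = math.log(hr_low), math.log(hr_high)
    return (log_low + log_high) / 2, (log_high - log_low) / (2 * Z95)

def pool(studies):
    """Fixed-effect inverse-variance pooling of (log HR, SE) pairs.

    Returns (pooled HR, (CI low, CI high), I^2 in percent)."""
    weights = [1 / se ** 2 for _, se in studies]
    total = sum(weights)
    pooled = sum(w * est for w, (est, _) in zip(weights, studies)) / total
    pooled_se = math.sqrt(1 / total)
    # Cochran's Q: weighted squared deviations of each study from the pooled value.
    q = sum(w * (est - pooled) ** 2 for w, (est, _) in zip(weights, studies))
    df = len(studies) - 1
    i_squared = 100 * max(0.0, (q - df) / q) if q > 0 else 0.0
    ci = (math.exp(pooled - Z95 * pooled_se), math.exp(pooled + Z95 * pooled_se))
    return math.exp(pooled), ci, i_squared

def report(label, studies):
    hr, (low, high), i2 = pool(studies)
    print(f"{label}: pooled HR {hr:.2f} (95% CI {low:.2f} to {high:.2f}), I^2 = {i2:.0f}%")

# Point 2 (hypothetical): five studies whose CIs all cross HR = 1.0.
null_studies = [from_ci(lo, hi) for lo, hi in
                [(0.90, 1.35), (0.95, 1.50), (0.85, 1.30), (0.98, 1.45), (0.92, 1.40)]]
report("five non-significant studies", null_studies)

# Point 3 (hypothetical): two significant studies pointing in opposite directions.
opposite_studies = [from_ci(0.60, 0.90), from_ci(1 / 0.90, 1 / 0.60)]
report("two opposite studies", opposite_studies)
```

In the first case the pooled interval lies entirely above 1.0 even though no single study's interval does. In the second, the average lands on HR = 1.0 with a respectable-looking interval; only the I² of over 90% signals that the studies flatly disagree, which is the statistical version of the averaged railroad gauge.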

Hi Dr. Feinman, funny you should email… I am about to finish my case study.

I started to realize last fall that I may be stable because I am controlling my fasting blood glucose… it turns out peripheral clocks can become misaligned and desynchronized from central circadian clocks. My regimen may align peripheral clocks, allowing fasting BG to be normalized as a symptom of enough clock synchrony to slow or prevent metabolic disease progression. Glycemic control during the day reinforces circadian controls, which in turn reinforce the next day’s glycemic control.

I think I have a better idea how to help others now… By the way, certain fats alter the liver clock (and increase my BG).

Let me know what you think. Kathleen Broomall

I think this is an important, under-investigated question. There is one good study by my colleague at Downstate: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2925198/pdf/nihms225004.pdf

Kathleen, I am intrigued by this. Could you share the regimen that you think may realign peripheral clocks?

Great post as usual. Clear and easy to understand. I’m still trying to understand that low-carbohydrate and all-cause mortality meta-analysis, which is not referred to in all the ‘reviews’ on low-carb diets in the nutrition journals saying how bad they are. Does anyone understand it?

It is easy to understand if it is what I think it is and is included in today’s post.

I recently came across a study that makes me think they’ve been howling up the wrong tree all this time. I don’t have the link to hand (it was probably in JCEM) but in short, it found that in 50% of major coronary incidents examined for thyroid function, the victim was found to have a significant degree of T3 deficiency. The study went on to cite “flabby heart syndrome” as being a known consequence of hypothyroidism (and pointed out that unfortunately, the link between low thyroid and heart attacks isn’t something that can be ethically studied at the experimental level, so all we can do is observe and report).

This turns on its ear the “obesity causes heart disease” thing — maybe that’s not the case at all. Maybe low thyroid causes both (we know it can cause obesity, d’oh!), and there’s a mere correlation, not a causative relationship.

We also know that excess blood lipids are a consequence of low thyroid function, as is high blood pressure (both through biochemical imbalance and through a tendency to remove calcium from the blood and deposit it where it doesn’t belong, such as arterial walls; reducing blood calcium levels also affects potassium levels).

80% of people over age 50 have some degree of impairment in conversion of T4 to T3; necropsies at one aged-care facility found goiters in 26% of patients. What’s your age-range for heart disease? Funny how it’s a match for this…

I put all this together and conclude the whole damned heart disease/high blood pressure/high cholesterol axis has squat to do with diet, and everything to do with T3 insufficiency, whether due to the natural decline of aging or the less-than-ideal range found in the “healthy” population (given that about 25% of adults show *some* clinical signs of sub-optimal thyroid function, albeit often mistaken for another chronic condition or just plain “getting old”).

If diet has anything to do with it at all, it’s that soy and aspartame are thyroid inhibitors.

Sorry for overlooking this interesting comment. I am not sure I can really comment on it beyond agreeing that diet and being overweight are unlikely to be major players for most people.

Dear Dr. Feinman,

So you’re a genius. Great.

Now do something useful and make Mrs. Feinman proud.

Tell us how enzymes affect constitutive inflammation and how we can deal with it.

Al

N.B. Your explanation of meta-analysis should be on page 1 of every medical school textbook. AL

Sorry for the delay in answering. Understanding inflammation and impressing Mrs. Feinman may be beyond my capabilities.

Thank you for exposure to a new line of thought on this approach.

There was a typo in my original blog post. Corrected:

• Cristi Vlad is running another promotion for low-carbohydrate books in Kindle version for $2.99. There are 8 authors and 11 books. One day only, Wednesday August 11, 2015. http://cristivlad.com/3dollarbooks/

Good analysis. Seems to me the only reason to do a meta-analysis would be if your small study showed a trend toward benefit or risk but it wasn’t statistically significant because of a small number of participants. The problem I find when reading meta-analyses is that often every study used at least slightly different study populations, different times, and different end points. And if they all used food questionnaires, I don’t waste my time reading them.

What you say is correct but suggests that the correct way to do a meta-analysis is to not do any statistical manipulations. Show that the results of different trials are similar (or not). That way, if they are similar, then “slightly different study populations, different times, and different end points” works for you. It says that the results cover different conditions. On food questionnaires, you have to distinguish between recall (“how many eggs do you eat each week?”) and record keeping (“write down everything that you eat”). The former are very unreliable, but the latter can be pretty good. In both cases, of course, it depends on what the outcome is. If the group A result is 10 times the group B result, then you can guess that the food records are useful, however much error there may be.

One problem is that even full-text reports often don’t give enough details to determine what the questionnaire was. They often just say, “intake was determined with food questionnaires.” Most studies don’t use record keeping. I’ve been writing down what I eat since 1996, when I suspected I was sensitive to wheat (I got heartburn if I had a piece of bread), and even with the habit, I often forget to log a meal until later, or don’t log it if I eat 3 peanuts or a slice of cheese between meals.

I agree that what we want are results that are large enough that we don’t need statistical games to show effects. But the popular press reports the results of all studies in the same way, and that’s what the average citizen remembers. And study details are often behind paywalls, available only to rich people or those with academic affiliations, so science journalists can’t check the veracity of results and just report the authors’ conclusions.

That’s why blogs by people like you are so valuable.

You are too kind. Volek’s study on the people with metabolic syndrome is a classic. The food records are very complete (and of course, he knew when they were in ketosis) and the differences in weight loss were very large.

Moreover, how can we interpret observational studies about saturated fat when among the main sources of saturated fat we find pizza, cakes, cookies, donuts, pies, crisps, cobblers, granola bars, pasta and candies?

Do we see in the studies the effect of the mozzarella cheese or the effect of the wheat in the pizza?

Is it possible to discern the specific effect of the saturated fat on a donut using statistics?

Do the results of the included studies apply to healthy sources of fat in the diet (e.g. meat, butter, coconut oil, etc.)?

“The jarring notes are ‘precise estimate’ in combination with ‘combining…independent studies.’” That sentence caused this thought to appear in my mind: Cognitive dissonance provides clarity?.!?!…!.?

In nutrition, cognitive dissonance is the name of the game.

The jarring thing for me was “Meta-analysis is most often used to assess the clinical effectiveness of healthcare interventions; it does this by combining data from two or more randomized controlled trials.”

I guess that’s what you meant when you called “What is…” overly optimistic.

Regarding trans fats, an important consideration is that they consist of trans oleic and trans linoleic acids in amounts proportional to the fatty acid profile of the un-hydrogenated parent oil. In truth, excessive trans fat consumption runs the same sort of risks associated with excessive polyunsaturated fat consumption, because both are highly reactive molecules subject to lipid peroxidation.

Over the past decade or so, of all the articles that mention trans fats, I recall only one in which the author noted that trans fats consist of polyunsaturated and monounsaturated fatty acids.

Since soybean oil is the major oil used in frying and baking applications requiring a partially hydrogenated saturated fat replacement product, it might be useful to consider recent research findings involving soybean oil. “A diet high in soybean oil causes more obesity and diabetes than a diet high in fructose, a sugar commonly found in soda and processed foods, according to a new study. In the U.S. the consumption of soybean oil has increased greatly in the last four decades due to a number of factors, including results from studies in the 1960s that found a positive correlation between saturated fatty acids and the risk of cardiovascular disease.” http://www.sciencedaily.com/releases/2015/07/150722144640.htm

I find it peculiar that there is so very little interest in linoleic acid research. http://www.bmj.com/content/349/bmj.g7255/rapid-responses

It’s fascinating to see that the growth in the number of this type of analysis is almost perfectly exponential over time. As they compete for attention in publication-space they should eventually run out of lesser studies to feed on and become growth-limited.

Would they then become Nano-Meta-Analyses?

🙂

Ha. We expect that, like many organisms undergoing exponential growth, they will soon poison their own environment and diet.

The problem is not with the meta-analysis, but with the adoption of an overly stringent criterion for statistical significance. A meta-analysis should use a 50% CI, not a 95% CI, which would result in a lot more significant findings.

Sort of disagree. The problem is the cut-and-dried application of arbitrary rules and especially the hidden assumption that the bigger the n, the better the study. The real value of a meta-analysis is the collection of different papers that meet some criterion; I agree that broader is better if it fits the scientific idea. Then, if the studies go in a generally perceptible direction, that suggests that there is a conclusion that is reasonable if not completely proven. If there is disagreement among the different studies, then that is evidence for a controversial, unsettled issue. That’s it. If you don’t know, you don’t know. Physicians have to say something (and they like numbers), but they need to avoid confusing their best intuition with established science. Gene Fine and I had started a meta-analysis on low-carb weight loss. Our point was that, even beyond statistical significance, what is the probability that 10 out of 12 low-fat vs. low-carb comparisons would come out numerically in one direction? That is, if you think of them as Bernoulli trials, how many heads in a row will make you think the coin is crooked? It seemed low-carb usually wins. Trying to get a number out of that would not have been meaningful. There are many more trials now, and I think the result is the same, but we decided that, if it is not a dead horse, it is one that we did not want to ride.
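The coin-flip question in the reply above can be answered with an exact binomial (sign) test. A minimal sketch, assuming each comparison is an independent trial with a 50% chance of favoring either diet if there is no real difference, which is what the Bernoulli-trial framing implies. It counts only the direction of each result and does not produce a pooled effect size.

```python
from math import comb

def sign_test_p(successes, trials):
    """Exact one-sided binomial (sign test) probability of seeing at least
    `successes` out of `trials` if each outcome were a fair coin flip (p = 0.5)."""
    return sum(comb(trials, k) for k in range(successes, trials + 1)) / 2 ** trials

one_sided = sign_test_p(10, 12)
two_sided = min(1.0, 2 * one_sided)  # either diet "winning" 10 of 12 would be surprising
print(f"P(at least 10 of 12 in one direction): one-sided {one_sided:.4f}, two-sided {two_sided:.4f}")
```

By chance alone, 10 or more of 12 comparisons favor one particular diet with probability 79/4096, about 2%; allowing for either diet to come out ahead doubles that to about 4%.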

Oh, I agree with you, Richard. I was joking about changing the criterion (though criteria are in some sense arbitrary in terms of truth value; there’s nothing magical about p<.05 in terms of ultimate truth. It’s just a useful indicator of likelihood of replication).

Ha. I guess in nutrition, comments are like Woody Allen’s story that he was kidnapped and the kidnappers sent a letter to his parents.

“We have kidnapped your son. We will kill him unless you send us a million dollars. This is not a joke. A joke is included so that you can tell the difference.”

[…] Erik Edlund suggests another link to Richard Feiman. […]

[…] crap; put several of them together and what you have is a lot of crap. I will deny having expressed myself in those terms. Richard Feinman puts it more politely: “meta-analysis is to analysis what metaphysics […]

A possible benefit of doing a meta-analysis, if the trials are comparable, is that the standard error of a mean based on a sample decreases with increasing sample size! The trouble with using statistics is that the conditions for whatever distribution is postulated are unlikely to be met (random, independent, same probability, etc.).

Agree, and in addition, “if the trials are comparable” is a really big if.
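Both halves of this last exchange show up in a short simulation. It assumes, hypothetically, that every measurement carries the same systematic bias (as when every pooled study shares a flawed questionnaire). The standard error shrinks as one over the square root of n, just as the commenter says, but the estimate converges to the biased value rather than the true one, so “comparable” has to include “equally unbiased.”

```python
import math
import random

def simulate_mean(n, true_mean, sd, bias, rng):
    """Mean of n noisy measurements that all share the same systematic bias."""
    return sum(rng.gauss(true_mean + bias, sd) for _ in range(n)) / n

rng = random.Random(1)  # fixed seed so the run is reproducible
true_mean, sd, bias = 0.0, 1.0, 0.3  # hypothetical values for illustration

for n in (10, 100, 1000, 10000):
    standard_error = sd / math.sqrt(n)  # shrinks as 1/sqrt(n)
    estimate = simulate_mean(n, true_mean, sd, bias, rng)
    print(f"n={n:>6}: SE={standard_error:.3f}, estimate={estimate:.3f}, "
          f"error={estimate - true_mean:.3f}")
```

As n grows, the standard error heads toward zero while the error settles near the assumed bias of 0.3; a larger n buys precision about the wrong number.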